CHI'21 Course: An Introduction to Intelligent User Interfaces

This course is taught by Albrecht Schmidt (LMU Munich), Sven Mayer (LMU Munich), and Daniel Buschek (University of Bayreuth).

Recent advancements in artificial intelligence (AI) create new opportunities for implementing a wide range of intelligent user interfaces. Speech-based interfaces, chatbots, visual recognition of users and objects, recommender systems, and adaptive user interfaces are examples that have matured over the last 10 years due to new approaches in machine learning (ML). In many domains, modern ML techniques outperform previous approaches and enable new applications. Today, it is possible to run models efficiently on various devices, including PCs, smartphones, and embedded systems. Leveraging the potential of AI and combining it with human-computer interaction (HCI) approaches makes it possible to develop intelligent user interfaces that support users better than ever before. This course introduces participants to terms and concepts relevant in AI and ML. Using examples and application scenarios, we show in practice how intelligent user interfaces can be designed and implemented. In particular, we look at how to create optimized keyboards, how to use natural language processing for text- and speech-based interaction, and how to implement a recommender system for movies. Thus, this course aims to introduce participants to a set of machine learning tools that will enable them to build their own intelligent user interfaces. The course includes video-based lectures that introduce concepts and algorithms, supported by practical and interactive exercises using Python notebooks.

Part 01: Overview of AI and ML Terms, Concepts and Tools

This is the basic module of this course. We discuss what intelligent user interfaces are and how they relate to HCI as well as to AI. We introduce basic terms and concepts in machine learning and AI, thus providing the foundation for the other modules. In particular, this introduction and overview focuses on examples that *combine* topics from HCI and AI for intelligent user interfaces: simple algorithms are first used to communicate AI basics as well as the fundamental aspects and challenges of integrating them into UIs. This is then complemented by an exploration of further state-of-the-art methods and pointers to relevant libraries for using them in one's own research and practical projects. All course participants are expected to attend this first module. Thereafter, we suggest that participants choose among the other modules based on their interests.

Part 02: Recommender Systems and Adaptive UIs

In this module, we first look at challenges that arise from interacting with massive amounts of information. In many domains, the number of choices is too large for users to consider every option themselves. Examples are online stores with thousands (or millions) of items for sale. Algorithms are needed to narrow down what the user should look at. Similar challenges arise in music and video streaming and in content presentation in social networks or news portals. We look at different approaches, including collaborative filtering, content-based filtering, and filtering based on contextual and demographic factors. We explain how the basic algorithms work and show a practical example of how to create a movie recommendation system. In the course, we also discuss how the information required for recommender systems can be acquired implicitly and explicitly. This is complemented by discussions on how such approaches can become the basis for adaptive user interfaces.
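To make collaborative filtering concrete, here is a minimal sketch of user-based collaborative filtering: a user's rating for an unseen movie is predicted as the similarity-weighted average of other users' ratings, with cosine similarity computed over the movies both users have rated. The users, movies, and ratings are hypothetical toy data, and the functions `cosine`, `predict`, and `recommend` are illustrative names, not the course's notebook code.

```python
import math

# Hypothetical toy data: user -> {movie: rating on a 1-5 scale}.
ratings = {
    "alice": {"Alien": 5, "Heat": 3, "Up": 1},
    "bob": {"Alien": 4, "Heat": 2, "Up": 1, "Coco": 2},
    "carol": {"Alien": 1, "Heat": 2, "Up": 5, "Coco": 5},
    "dave": {"Alien": 5, "Heat": 4, "Coco": 1},
}


def cosine(u, v):
    """Cosine similarity of two users over the movies both have rated."""
    common = set(u) & set(v)
    if not common:
        return 0.0
    dot = sum(u[m] * v[m] for m in common)
    norm_u = math.sqrt(sum(u[m] ** 2 for m in common))
    norm_v = math.sqrt(sum(v[m] ** 2 for m in common))
    return dot / (norm_u * norm_v)


def predict(user, movie):
    """Similarity-weighted average of other users' ratings for `movie`."""
    num, den = 0.0, 0.0
    for other, their_ratings in ratings.items():
        if other == user or movie not in their_ratings:
            continue
        sim = cosine(ratings[user], their_ratings)
        num += sim * their_ratings[movie]
        den += abs(sim)
    return num / den if den else None


def recommend(user, k=3):
    """Rank movies the user has not rated by predicted rating, highest first."""
    all_movies = {m for r in ratings.values() for m in r}
    scored = []
    for movie in all_movies - set(ratings[user]):
        score = predict(user, movie)
        if score is not None:
            scored.append((movie, score))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    print(recommend("alice"))
```

Because all toy ratings are positive, raw cosine similarity is always positive here; a common refinement is to mean-center each user's ratings first, which yields a Pearson-style similarity. Item-based variants compute similarities between movies instead of between users.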

Part 03: Natural Language Processing and Bots

People primarily interact with each other through natural language, be it in spoken or written form. When interacting with computer systems and data, natural language offers many new opportunities, for example, as an alternative modality or as part of a multimodal UI. In this session, we examine where natural language processing can be used to improve human-computer interaction. We further discuss the main challenges when moving from a graphical user interface to a text- or speech-based interaction model. First, we look at basic approaches to processing natural language text (e.g., tokenization, normalization, lemmatization, named-entity recognition). We then discuss and explain algorithms that can discover topics and concepts in texts, summarize texts (to different lengths), and identify the sentiment of a text or phrase. We conclude by exploring chatbots and their applications. In the practical session, we introduce different libraries and toolboxes.
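Tokenization, normalization, and sentiment identification can be sketched with Python's standard library alone. This is a minimal, hypothetical example: a regular-expression tokenizer, lowercasing as the only normalization step, and a lexicon-based sentiment score with a naive one-word negation rule. The tiny `LEXICON` and `NEGATIONS` sets are invented for illustration; lemmatization and named-entity recognition are usually delegated to NLP libraries (for example, NLTK or spaCy) and are omitted here.

```python
import re

# Invented toy lexicon (word -> polarity); real systems use curated or learned lexicons.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "awful": -2, "hate": -2}
NEGATIONS = {"not", "never", "no"}


def tokenize(text):
    """Split text into word tokens (letters, digits, apostrophes)."""
    return re.findall(r"[A-Za-z0-9']+", text)


def normalize(tokens):
    """Lowercase tokens; a fuller pipeline might also lemmatize here."""
    return [t.lower() for t in tokens]


def sentiment(text):
    """Sum lexicon polarities, flipping a word's polarity after a negation word."""
    tokens = normalize(tokenize(text))
    score = 0
    for i, tok in enumerate(tokens):
        polarity = LEXICON.get(tok, 0)
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    return score


if __name__ == "__main__":
    for sentence in ["I love this movie!", "The plot was not good."]:
        print(sentence, sentiment(sentence))
```

Lexicon-based scoring is easy to inspect but misses context such as sarcasm or negation that spans more than one word; learned classifiers address some of these limitations.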

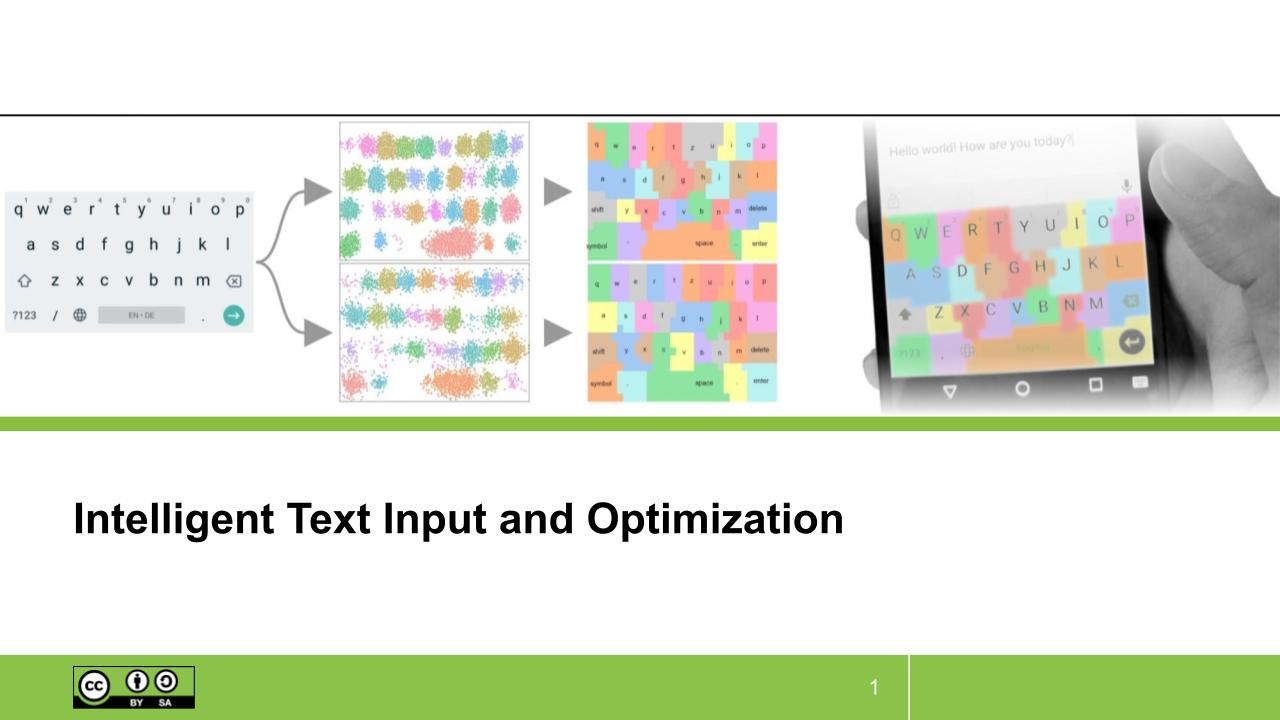

Part 04: Intelligent Text Input and Optimization

This module demonstrates how computational methods can be used to personalize and adapt user interfaces to individual users based on observed user behavior. Building on this, we introduce methods to infer user intention from such personalized behavior models. In particular, we introduce a probabilistic perspective on these aspects and take modern "smart" mobile keyboards as a concrete application context. Within this context, we examine two problems that can be addressed with computational methods, covering both design time and use time: First, we apply computational optimization to the classic problem of keyboard layout design, which uses basic HCI models to guide the search through a formalized design space toward an "optimal" design solution. Second, we apply probabilistic modeling and inference to keyboard decoding, that is, working out what a user intended to type, even if they typed very sloppily. Here, we cover a range of modern keyboard features, including key penalization, auto-correction, and word prediction.
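The decoding idea can be illustrated with Bayes' rule: the posterior of a word given a sequence of touches is proportional to a language-model prior times the likelihood of each touch under the intended key. The sketch below is a simplified, hypothetical decoder, not the course's implementation. It assumes a staggered QWERTY grid with unit-sized keys, an isotropic 2D Gaussian touch model with a made-up spread `SIGMA`, a tiny invented unigram prior `PRIOR`, and words whose length equals the number of touches (no insertions or omissions).

```python
import math

# Hypothetical keyboard geometry: staggered QWERTY rows, keys 1 unit wide and high.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
ROW_OFFSETS = [0.0, 0.5, 1.5]
KEY_CENTERS = {
    ch: (ROW_OFFSETS[r] + c + 0.5, r + 0.5)
    for r, row in enumerate(ROWS)
    for c, ch in enumerate(row)
}

SIGMA = 0.5  # assumed touch spread, in key widths

# Invented unigram language-model prior over a tiny vocabulary.
PRIOR = {"the": 0.05, "tie": 0.005, "toe": 0.002, "get": 0.01, "and": 0.03}


def log_gaussian(point, center, sigma=SIGMA):
    """Log density of an isotropic 2D Gaussian centered on a key."""
    dx, dy = point[0] - center[0], point[1] - center[1]
    return -(dx * dx + dy * dy) / (2 * sigma ** 2) - math.log(2 * math.pi * sigma ** 2)


def nearest_key_string(touches):
    """Baseline without decoding: take the closest key for each touch."""
    return "".join(
        min(KEY_CENTERS, key=lambda ch: math.dist(t, KEY_CENTERS[ch])) for t in touches
    )


def decode(touches, prior=PRIOR, sigma=SIGMA):
    """Return (word, posterior) pairs, most probable first.

    log P(w | touches) = log P(w) + sum_i log N(touch_i; center(w_i), sigma^2 I) - log Z
    """
    log_scores = {}
    for word, p in prior.items():
        if len(word) != len(touches):
            continue  # this sketch ignores insertions and omissions
        log_scores[word] = math.log(p) + sum(
            log_gaussian(t, KEY_CENTERS[ch], sigma) for t, ch in zip(touches, word)
        )
    if not log_scores:
        return []
    # Normalize with the log-sum-exp trick for numerical stability.
    best = max(log_scores.values())
    z = sum(math.exp(s - best) for s in log_scores.values())
    posterior = [(w, math.exp(s - best) / z) for w, s in log_scores.items()]
    return sorted(posterior, key=lambda pair: pair[1], reverse=True)


# A sloppy attempt at "the": the middle touch lands closest to "g".
touches = [(4.6, 0.6), (5.2, 1.5), (2.4, 0.5)]

if __name__ == "__main__":
    print(nearest_key_string(touches))  # → tge
    print(decode(touches)[:2])
```

Even though the literal nearest keys spell "tge", the decoder ranks "the" first because both the touch likelihood and the prior favor it. Auto-correction and word prediction in real keyboards build on the same principle with richer language models and touch models, which may also be personalized per user.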

Instructors:

Albrecht Schmidt

Albrecht Schmidt is a full professor for Human Centered Ubiquitous Media in the Computer Science Department at LMU Munich. The focus of his work is on novel user interfaces to enhance and amplify human cognition. He is working on interaction techniques and intelligent interactive systems in the context of ubiquitous computing. In 2018 he was elected to the ACM CHI Academy.

Sven Mayer

Sven Mayer is an assistant professor for HCI at LMU Munich. Prior to that, he was a postdoctoral researcher at Carnegie Mellon University in the Future Interfaces Group and received his Ph.D. from the University of Stuttgart in 2018. In his research, he uses machine learning tools to design, build, and evaluate future human-centered interfaces. This allows him to focus on hand- and body-aware interactions in contexts such as mobile scenarios, augmented and virtual reality, and large displays.

Daniel Buschek

Daniel Buschek leads a junior research group at the intersection of Human-Computer Interaction and Machine Learning / Artificial Intelligence at the University of Bayreuth, Germany. Previously, he worked at the Media Informatics Group at LMU Munich, where he also completed his doctoral studies, including research stays at the University of Glasgow, UK, and Aalto University, Helsinki. In his research, he combines HCI and AI to create user interfaces that enable people to use digital technology in more effective, efficient, expressive, explainable, and secure ways.